DISCLOSURE: This post may contain affiliate links, meaning when you click the links and make a purchase, we receive a commission.

When it comes to building an ESXi home lab, you can run into many difficulties. One of the most common is getting enough network cards. With more NICs, you can separate network traffic into proper virtual LANs.

The problem is that consumer-level hardware usually comes with only one NIC. You can add extra NICs, but each one takes up a slot you might need for other equipment, which is a real limitation on a home lab machine.

Fortunately, there is a solution: a dual-port PCI-X NIC. Installed in an ordinary PCI slot, it works much like two separate PCI NICs but occupies only one slot. We recommend the Intel Pro/1000 Dual Gigabit NIC PCI-X card.

This not only solves the NIC shortage but also leaves the remaining PCI slots free for other cards. These cards are also inexpensive, and one dual-port card does the job of two single-port NICs.

Intel Pro/1000 Dual Gigabit NIC PCI-X Card in PCI Slot

More details on ESXi Networking Configuration:

Is it a good idea to use four NICs per host? In current business environments, hosts typically have four 1 Gb NICs, and four 10 Gb NICs per host is also common in server environments. So yes, you can use four or more NICs per host, depending on the ports and slots your hardware offers.

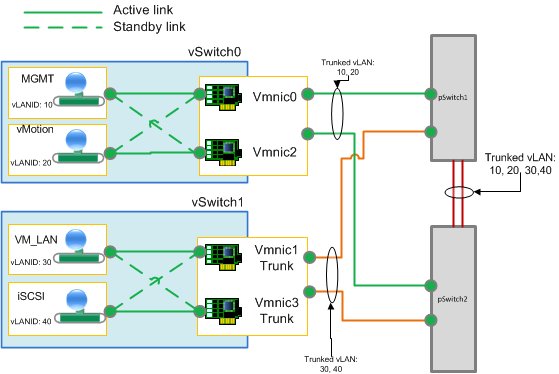

You will also usually see people using as many NICs as possible. This lets them separate storage, management, vMotion, Fault Tolerance, and VM traffic into their own virtual LANs (VLANs), and it helps replicate a production environment. As a result, you learn more about VLANs and networking along the way.
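To make the idea of one VLAN per traffic type concrete, here is a minimal sketch of a VLAN plan and a sanity check for it. The traffic types come from the paragraph above; the VLAN IDs (10 to 50) and the `validate_vlan_plan` helper are hypothetical, chosen only for illustration. The check flags IDs outside the usable 802.1Q range and two traffic types sharing one VLAN, which would defeat the separation.

```python
# Hypothetical VLAN plan for a home lab host. The traffic types come from
# the article; every VLAN ID below is an illustrative assumption.
VLAN_PLAN = {
    "Management": 10,
    "vMotion": 20,
    "Storage": 30,
    "Fault Tolerance": 40,
    "VM Traffic": 50,
}


def validate_vlan_plan(plan: dict[str, int]) -> list[str]:
    """Return a list of problems; an empty list means the plan looks sane."""
    problems = []
    seen: dict[int, str] = {}
    for traffic, vlan_id in plan.items():
        # 802.1Q VLAN IDs 1-4094 are usable; 0 and 4095 are reserved.
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{traffic}: VLAN {vlan_id} is out of range")
        # Two traffic types on one VLAN defeats the separation.
        if vlan_id in seen:
            problems.append(f"{traffic} shares VLAN {vlan_id} with {seen[vlan_id]}")
        else:
            seen[vlan_id] = traffic
    return problems


if __name__ == "__main__":
    issues = validate_vlan_plan(VLAN_PLAN)
    print("OK" if not issues else "\n".join(issues))
```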

The image above shows a setup with four NICs.

This setup uses two physical switches, and both must be VLAN aware, so you can use either smart switches or managed switches. We recommend smart switches, which are cheaper than managed switches. Whether you choose a 16-port or a 24-port switch is up to you.
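One common reason for two switches is redundancy: if every virtual switch has one uplink on each physical switch, losing a switch does not cut a vSwitch off. The host-facing switch ports would carry tagged traffic for several VLANs, which is why the switches must be VLAN aware. The sketch below assumes that redundancy goal; the `vmnic0` to `vmnic3` names follow ESXi's usual NIC naming, while the switch names, the vSwitch grouping, and the `single_switch_vswitches` helper are hypothetical.

```python
# Hypothetical 4-NIC layout: two virtual switches, each with one uplink
# cabled to each physical switch. All names here are illustrative.
UPLINK_TO_SWITCH = {
    "vmnic0": "switch-A",
    "vmnic1": "switch-B",
    "vmnic2": "switch-A",
    "vmnic3": "switch-B",
}

VSWITCH_UPLINKS = {
    "vSwitch0 (Management, vMotion, FT)": ["vmnic0", "vmnic1"],
    "vSwitch1 (Storage, VM Traffic)": ["vmnic2", "vmnic3"],
}


def single_switch_vswitches(uplinks, cabling):
    """Return vSwitches whose uplinks all land on the same physical switch."""
    at_risk = []
    for vswitch, nics in uplinks.items():
        switches = {cabling[nic] for nic in nics}
        # Every uplink behind one physical switch = single point of failure.
        if len(switches) < 2:
            at_risk.append(vswitch)
    return at_risk


if __name__ == "__main__":
    risky = single_switch_vswitches(VSWITCH_UPLINKS, UPLINK_TO_SWITCH)
    print("Every vSwitch spans both switches" if not risky else risky)
```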

So what about PCI vs. PCI-X:

PCI, or Peripheral Component Interconnect, is a bus used to attach internal components to a computer. A little history: the first version, PCI 1.0, used 5 V signaling and ran at 33 MHz.

A later revision added 66 MHz operation at 3.3 V. This was enough for ordinary networking, and 64-bit PCI slots were also introduced for high-end networking. Today, consumer motherboards that still include PCI slots generally provide 32-bit slots, usually running at 33 MHz.

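PCI-X (Peripheral Component Interconnect eXtended) is a faster extension of PCI that also uses a 64-bit slot. The original PCI-X runs at up to 133 MHz, and PCI-X 2.0 adds double- and quad-data-rate modes, topping out at PCI-X 533 (an effective 533 MT/s on a 133 MHz clock). PCI-X cards are backward compatible with conventional PCI, so as long as the card's keying matches the slot, you can use one in an ordinary 32-bit or 64-bit PCI slot, where it runs at the slot's speed and width. However, a 64-bit card is longer than a 32-bit slot.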

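So the card will still fit, but the extra part of its edge connector will hang past the end of the slot, as long as nothing on the motherboard blocks it. If the card does not fit at all, the keying is the likely culprit: PCI cards and slots are notched for 3.3 V or 5 V signaling, and a card without a notch for the slot's voltage will not go in. Universal cards have both notches and fit either kind of slot. Overall, PCI-X offers more bandwidth than conventional PCI while remaining usable in ordinary PCI slots.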
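The keying rule and the fallback behavior can be modeled in a few lines. When a PCI-X card sits in a conventional PCI slot, the bus runs at the slower clock and narrower width of the two. This is a simplified sketch: the `Card`/`Slot` types and helper names are hypothetical, and the example card (universal-keyed, 64-bit, 133 MHz) and slot (5 V-keyed, 32-bit, 33 MHz) are illustrative assumptions, not vendor specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    voltages: frozenset[str]  # signaling voltages the card is notched for
    width_bits: int           # 32 or 64
    clock_mhz: float          # fastest clock the card supports


@dataclass(frozen=True)
class Slot:
    voltage: str              # a slot is keyed for a single voltage
    width_bits: int
    clock_mhz: float


def fits(card: Card, slot: Slot) -> bool:
    """The card seats only if it has a notch matching the slot's key.

    Width is not checked: a 64-bit card still seats in a 32-bit slot,
    with the extra part of its edge connector overhanging the slot.
    """
    return slot.voltage in card.voltages


def negotiated_mb_s(card: Card, slot: Slot) -> float:
    """Peak bandwidth once the card falls back to the slot's mode."""
    width = min(card.width_bits, slot.width_bits)
    clock = min(card.clock_mhz, slot.clock_mhz)
    return clock * width / 8  # MHz x bytes per transfer = MB/s


if __name__ == "__main__":
    # Illustrative values: nominal 133 MHz and 33 MHz are 400/3 and 100/3.
    card = Card(frozenset({"3.3V", "5V"}), 64, 400 / 3)
    slot = Slot("5V", 32, 100 / 3)
    if fits(card, slot):
        print(f"Fits; peak bus bandwidth about {negotiated_mb_s(card, slot):.0f} MB/s")
    else:
        print("Keying mismatch: the card will not seat in this slot")
```

A real bus also drops to the speed of its slowest device, which this model ignores.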

So what about the performance?

You can calculate the peak bandwidth of a PCI slot with the formula: Bandwidth (MB/s) = Frequency (MHz) × Bit width ÷ 8, where dividing by 8 converts bits to bytes. Remember that consumer motherboards use 32-bit slots. Here are the bandwidths of the different PCI buses:

- PCI 32-bit, 66 MHz: 266 MB/s

- PCI 32-bit, 33 MHz: 1067 Mbit/s or 133.33 MB/s

- PCI 64-bit, 66 MHz: 533 MB/s

- PCI 64-bit, 33 MHz: 266 MB/s
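The formula above is easy to check in code. A minimal sketch, assuming the nominal PCI clocks of 33⅓ MHz and 66⅔ MHz (which is where the "133" and "266" figures come from); the helper name is our own. The printed values match the list, which rounds them.

```python
# Nominal PCI clocks are really 33 1/3 MHz and 66 2/3 MHz.
PCI_CLOCKS_MHZ = {"33 MHz": 100 / 3, "66 MHz": 200 / 3}


def pci_bandwidth_mb_s(clock_mhz: float, width_bits: int) -> float:
    """Peak bandwidth in MB/s: clock (MHz) x bit width / 8 bits per byte."""
    return clock_mhz * width_bits / 8


for width in (32, 64):
    for label, clock in PCI_CLOCKS_MHZ.items():
        mb_s = pci_bandwidth_mb_s(clock, width)
        print(f"PCI {width}-bit, {label}: {mb_s:.1f} MB/s")
```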

For comparison, here are the bandwidths of some other common links:

- Gig-E (1000base-X): 125 MB/s

- Fast Ethernet (100base-X): 12.5 MB/s

- SATA 3 (SATA-600): 600 MB/s

- SATA 2 (SATA-300): 300 MB/s

- SATA 1 (SATA-150): 150 MB/s
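The figures in this list come from two different conversions. Ethernet rates are simply divided by 8 bits per byte, while SATA 1 to 3 use 8b/10b encoding, so 10 line bits carry one data byte. A small sketch of both conversions (the helper names are hypothetical; framing and protocol overhead are ignored):

```python
def ethernet_mb_s(line_rate_mbit: float) -> float:
    """Ethernet bit rate to MB/s: 8 bits per byte (ignores framing overhead)."""
    return line_rate_mbit / 8


def sata_mb_s(line_rate_gbit: float) -> float:
    """SATA 1-3 use 8b/10b encoding: 10 line bits carry one data byte."""
    return line_rate_gbit * 1000 / 10


for name, rate in [("Gig-E", 1000), ("Fast Ethernet", 100)]:
    print(f"{name}: {ethernet_mb_s(rate):.1f} MB/s")
for name, rate in [("SATA 3", 6.0), ("SATA 2", 3.0), ("SATA 1", 1.5)]:
    print(f"{name}: {sata_mb_s(rate):.0f} MB/s")
```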

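Thus, a dual Gigabit NIC PCI-X card in a 32-bit slot running at 66 MHz could, in theory, run both ports at full speed: 2 × 125 MB/s = 250 MB/s, just under the slot's 266 MB/s. That only holds for traffic in one direction, because PCI is a shared, half-duplex bus, and in the more common 32-bit, 33 MHz slot (133 MB/s) the bus tops out at roughly one port's worth of traffic. In practice this is mostly theoretical, since a home lab rarely needs the maximum throughput from its network cards.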
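The arithmetic behind this conclusion can be sketched as a headroom check: slot bandwidth minus the traffic two Gigabit ports would generate at line rate. This is a simplified model under stated assumptions: it uses peak bus figures, ignores PCI protocol overhead (so real headroom is smaller), and counts full-duplex traffic twice because the bus is shared and half duplex. The function name is our own.

```python
GIGE_MB_S = 1000 / 8  # one Gigabit Ethernet port, one direction


def bus_headroom_mb_s(ports: int, clock_mhz: float, width_bits: int,
                      full_duplex: bool = False) -> float:
    """Bus bandwidth left over when every port runs at line rate.

    PCI is a shared, half-duplex bus, so full-duplex traffic counts twice.
    A negative result means the bus, not the NICs, is the bottleneck.
    """
    bus = clock_mhz * width_bits / 8
    demand = ports * GIGE_MB_S * (2 if full_duplex else 1)
    return bus - demand


for label, clock in (("33 MHz", 100 / 3), ("66 MHz", 200 / 3)):
    for duplex in (False, True):
        spare = bus_headroom_mb_s(2, clock, 32, duplex)
        mode = "both directions" if duplex else "one direction"
        print(f"32-bit, {label}, {mode}: {spare:+.1f} MB/s headroom")
```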

Final Words:

So, if your question is whether you can use a PCI-X card in your motherboard's PCI slot, the answer is yes. The best part is that you get two Gigabit ports from one card while keeping other slots free for purposes such as an additional graphics card. In effect, one card solves two problems.

Reference: Donald Fountain, TheHomeServerBlog